The challenge of artificial intelligence is to move society from being data-driven to being value-driven.

During a recent crisis management training session I attended, our instructor, a dyed-in-the-wool artificial intelligence (AI) enthusiast, spent an inordinate amount of time praising ChatGPT.

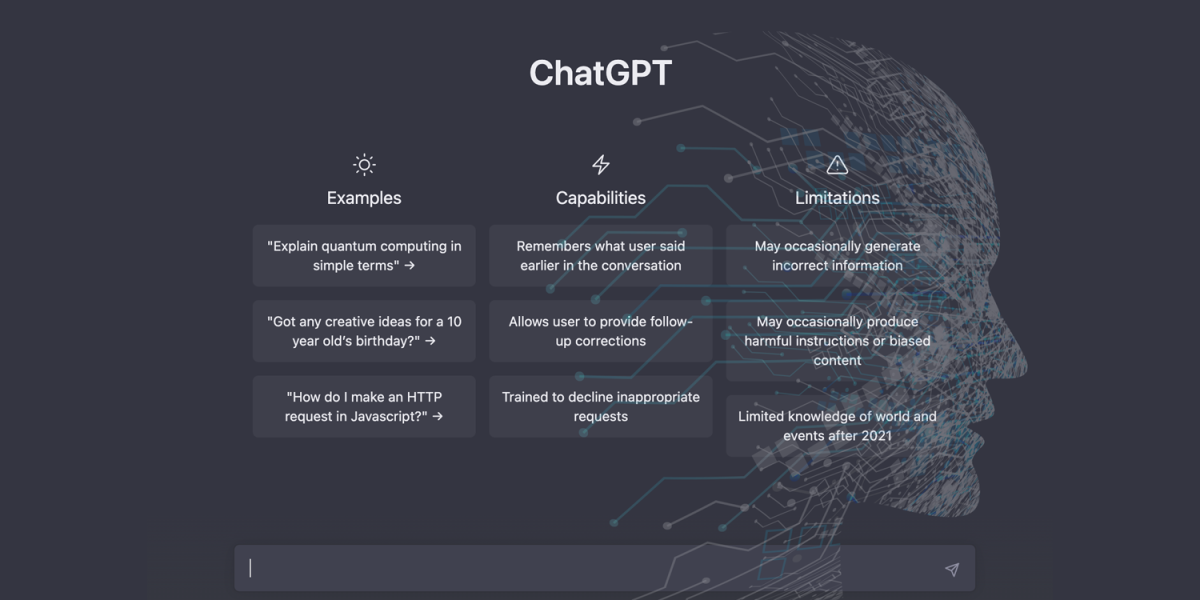

Not wanting to admit I had no idea what he was referring to, I sneakily looked it up on my phone. ChatGPT (Chat Generative Pre-trained Transformer) is essentially a chatbot, that is, software that carries on online chat conversations. It is powered by AI and was developed by OpenAI, a research laboratory in California, United States.

You know those often frustrating automated customer-service chats? They are run by older chatbots, the great-grandparents of ChatGPT, so to speak. Now picture this: instead of leaving you frustrated, the chatbot gives you strikingly human-like answers to any prompt. That is ChatGPT.

But there is more! ChatGPT can write computer programs and compose music. It can even write essays and poetry. To show how simple it is to use and how powerful its output can be, our instructor gave us a demonstration. All he did was connect ChatGPT to Google Forms and ask it to write the table of contents for a paper on “Crisis Management” and a few paragraphs as well. After just a couple of seconds, voilà! In front of us was a remarkably coherent and accurate text.

After picking my jaw up off the floor, I asked what any author worth their salt would have asked: “What about copyright?” After all, ChatGPT was trained on a massive quantity of data from the internet (570 gigabytes of text, to be precise), some of which is copyrighted material. It turns out this is something of a gray area. At present, it is unclear whether ChatGPT alters original works enough to avoid copyright infringement (and, if it does, whether this constitutes true creation or merely “copyright laundering”). What’s clear is that OpenAI is not liable for damages.¹ So, if users face a lawsuit, they are pretty much on their own.

Copyright implications aside, one thing is certain: with Google also releasing its own chatbot, Bard, AI has become part of our daily lives more than ever.

Some Implications for Education

Almost everyone in my family is a teacher. So, unsurprisingly, once the training was over, my mind went straight to implications for education. Picking up the phone, I called my twin sister, Inés, who is a headteacher at a bilingual school in Argentina.

We talked for a while about how AI will force educational institutions to rethink plagiarism. But soon we were navigating deeper waters, discussing assessment criteria and the core goals of education.

“I think AI developments will force us to focus even more on emotional literacy and critical thinking,” my sister said. “In a time when a computer can give you an answer in a nanosecond, our emphasis must be on discernment, not content regurgitation.”

Besides the potential for academic dishonesty,² one of the fears surrounding ChatGPT-like technology is that it will be used to generate massive quantities of fake news. “Instead of one false report about a presumably stolen election, someone could quickly generate lots of unique reports and distribute them on social media to make it seem like different people are writing those reports,” said Ulises Mejias, a communication professor at the State University of New York at Oswego and co-author of The Costs of Connection.³

What About the Church?

As a church, we have often taken the simplistic approach of demonizing new technologies when faced with complex scenarios such as this one. I believe this is a terrible mistake. Not only is such technology not going to disappear (no matter how deeply we bury our heads in the sand), but the longer we delay engagement, the more vulnerable we become to potential abuses and the less able we are to influence society for good. After all, unless the salt mixes with the food, it cannot give it flavor!

“Demonizing technology is not the solution. We need to empower students to sift through the massive amounts of data they are exposed to every day,” my sister said. If they are to enjoy the benefits of new technologies and successfully avoid their pitfalls, “students should not complete formal education without becoming familiar with concepts like algorithmic bias, AI ethics, and misinformation. We need to furnish them with practical tools. For example, we should all know how to do a reverse image search to check if the picture that accompanies a post is original or not.”

Compassionate AI

Compassion is not a word often associated with AI. But I hope one day it will be. At least, it could be if we start teaching AI literacy and ethics. We can ensure that AI developments like ChatGPT significantly improve our lives and our society if we

- do not abdicate our rights as citizens

- are willing to write letters to politicians and educators alike

- push for policies that ensure minority groups are considered when data is used to train algorithms

- update copyright laws

- demand that compassion and safety be built in by design, from the earliest stages of AI development.

Compassionate AI is not only possible, as initiatives like Deep Empathy and Cogito show, but also absolutely necessary. Because, as AI scientist and author Amit Ray wisely points out, “The real challenge is how to digitize and incorporate the human values like compassion, forgiveness, bravery, morality, love and creativity in AI based systems.”⁴

I asked ChatGPT how we could ensure AI is used for the greater good of humanity. This was its answer:

> Ensuring that AI is used for the greater good of humanity requires a combination of technical, legal, and ethical approaches. Here are some steps that can help:
>
> Developing ethical guidelines: Ethical guidelines should be established to guide the development and deployment of AI systems, ensuring that they are aligned with human values and respect human rights. These guidelines should address issues such as privacy, accountability, transparency, and fairness.
>
> Ensuring transparency: AI systems should be transparent and explainable, so that their decisions can be understood and evaluated by those affected by them. This will help build trust in the technology and ensure that it is used in a responsible and ethical manner.
>
> Promoting diversity and inclusivity: AI systems should be developed and deployed in a manner that is inclusive and reflects the diversity of society. This will help ensure that the technology does not perpetuate existing biases and discrimination.
>
> Establishing legal and regulatory frameworks: Governments should establish legal and regulatory frameworks that govern the use of AI and protect the rights and interests of citizens. These frameworks should hold AI systems and their developers accountable for their actions and ensure that they are used in a responsible manner.
>
> Encouraging public participation and dialogue: There should be ongoing public engagement and dialogue about the development and deployment of AI, to ensure that the technology is used in a manner that is in the public interest.
>
> By taking these steps, we can ensure that AI is used for the greater good of humanity and that its benefits are shared by all members of society.

The original version of this commentary was posted on the Trans-European Division news site.